# Lab 2 - Using Jaeger

In the second laboratory of the workshop, we will install HotROD (Rides on Demand), a demo application that consists of several microservices and illustrates the use of the OpenTracing API. Afterwards, we will review most of the Jaeger features.

We will perform the following tasks:

- Install HotROD in K8s.

- Use the Jaeger UI.

- Obtain the data flow of an application.

- Search for the source of a bottleneck using Jaeger.

# 1. Installing HotROD

Install HotROD:

    kubectl create namespace hotrod
    K8S_INGRESS_IP=$(kubectl get -n observability ingress -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')
    kubectl apply -f - <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/component: app
        app.kubernetes.io/instance: hotrod
      name: hotrod-app
      namespace: hotrod
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/component: app
          app.kubernetes.io/instance: hotrod
          app.kubernetes.io/name: hotrod-app
      template:
        metadata:
          labels:
            app.kubernetes.io/component: app
            app.kubernetes.io/instance: hotrod
            app.kubernetes.io/name: hotrod-app
        spec:
          containers:
          - env:
            - name: JAEGER_AGENT_HOST
              value: jaeger-workshop-agent.observability.svc.cluster.local
            - name: JAEGER_AGENT_PORT
              value: "6831"
            image: jaegertracing/example-hotrod:latest
            imagePullPolicy: Always
            args: ["all", "-j", "http://$K8S_INGRESS_IP"]
            livenessProbe:
              httpGet:
                path: /
                port: 8080
            name: jaeger-hotrod
            ports:
            - containerPort: 8080
            readinessProbe:
              httpGet:
                path: /
                port: 8080
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: service
        app.kubernetes.io/instance: hotrod
      name: hotrod-service
      namespace: hotrod
    spec:
      selector:
        app.kubernetes.io/component: app
        app.kubernetes.io/instance: hotrod
      ports:
      - protocol: TCP
        port: 8080
        targetPort: 8080
    EOF

Enable port forwarding:

    kubectl port-forward -n hotrod service/hotrod-service 8080:8080

Access HotROD. The application is available at http://localhost:8080/.
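Before opening the browser, it can help to confirm that the port-forward is actually serving the application. The following is a minimal sketch, not part of the workshop material: `hotrod_is_up` is a hypothetical helper that issues the same `GET /` on port 8080 that the liveness and readiness probes in the manifest use.

```python
import urllib.request


def hotrod_is_up(url="http://localhost:8080/", timeout=3.0):
    """Return True if an HTTP GET on `url` answers with status 200."""
    # Ignore any proxy configured in the environment: the target is a local port-forward.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Connection refused, timeout, or an HTTP error status: treat all as "not ready".
        return False
```

Calling `hotrod_is_up()` while `kubectl port-forward` is running should return `True` once the pod is ready; if it returns `False`, check the pod status in the `hotrod` namespace first.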

# 2. Using HotROD

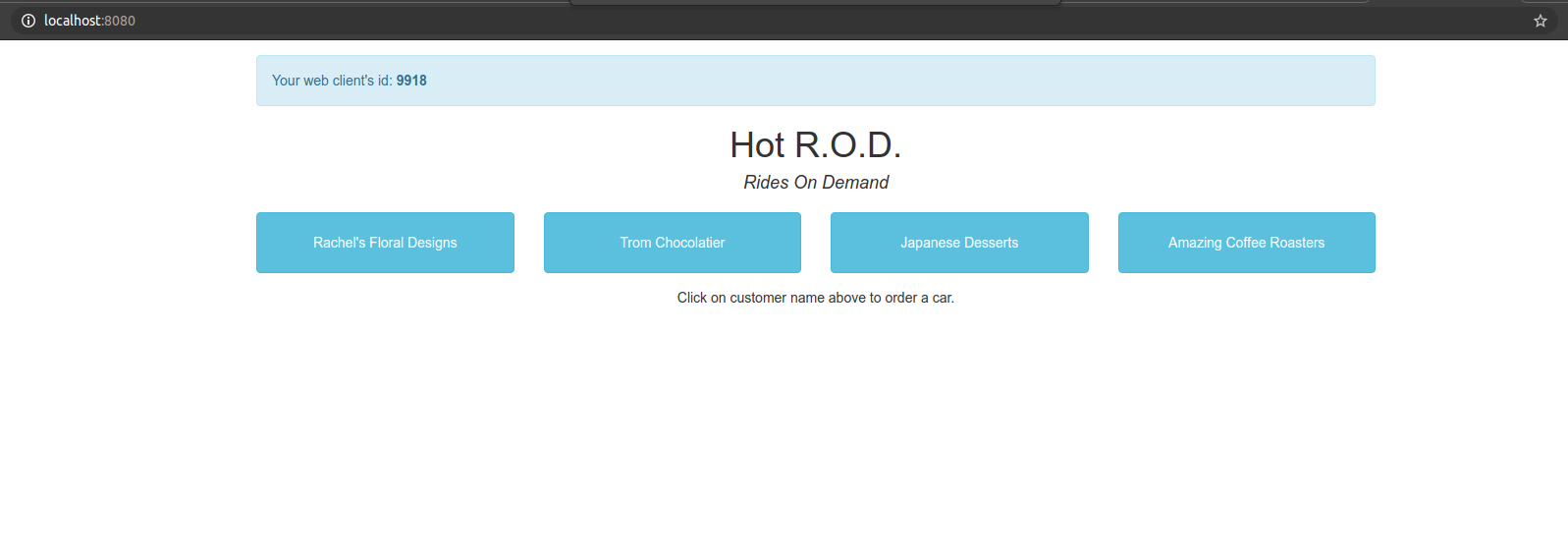

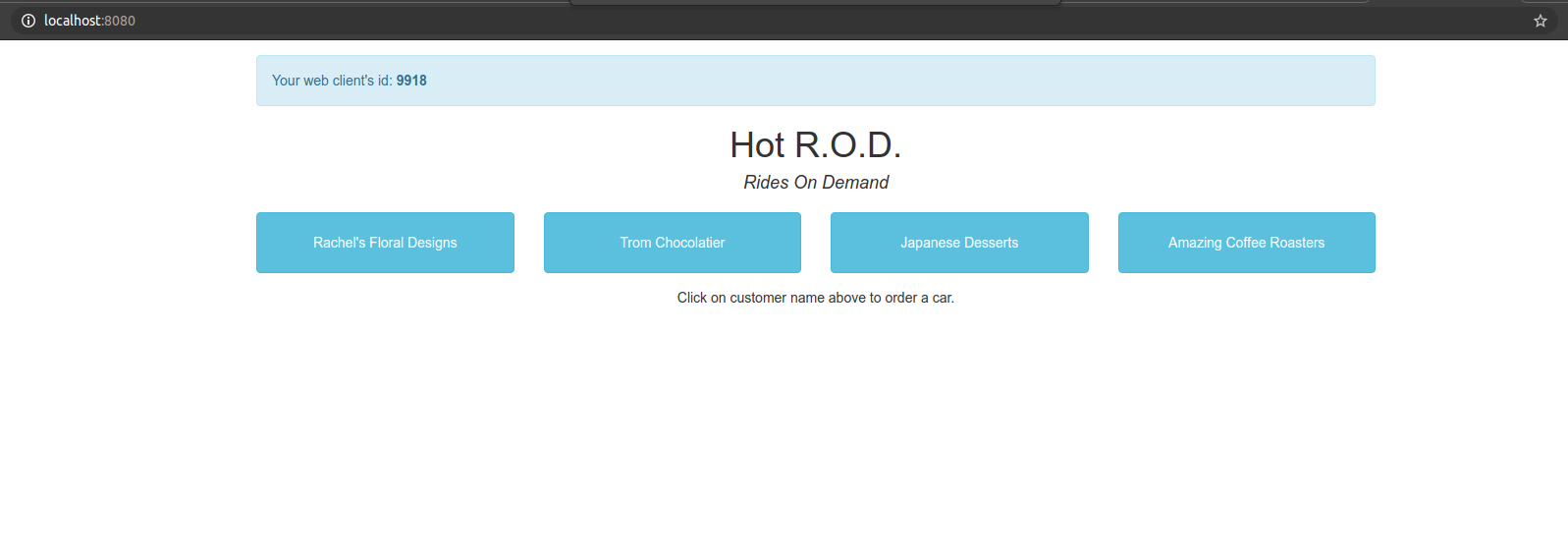

HotROD is a very simple "ride sharing" application.

On the home page there are four customers. Clicking one of the four buttons sends a car to that customer's location: a request for a car is sent to the backend, which responds with the car's license plate number and the expected time of arrival.

There are a few bits of debugging information we see on the screen.

- In the top left corner there is a `web client id: 9323`. It is a random session ID assigned by the JavaScript UI; if we reload the page we get a different session ID.

- In the line about the car we see a request ID, `req: 9323-1`. It is a unique ID assigned by the JavaScript UI to each request it makes to the backend, composed of the session ID and a sequence number.

- The last bit of debugging data, `latency: 782ms`, is measured by the JavaScript UI and shows how long the backend took to respond.

This additional information has no impact on the behavior of the application, but will be useful when we look under the hood.
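The ID scheme described above can be sketched in a few lines. This is an illustration only, not HotROD's actual JavaScript: the `WebClient` class, its method name, and the ID range are assumptions made for the example.

```python
import random


class WebClient:
    """Hypothetical stand-in for the ID bookkeeping done by the browser UI."""

    def __init__(self, rng=None):
        rng = rng or random.Random()
        # A new random session ID is drawn every time the "page" is loaded.
        self.session_id = rng.randint(1, 9999)
        self.sequence = 0

    def next_request_id(self):
        # Request ID = session ID, a dash, and a per-session sequence number.
        self.sequence += 1
        return f"{self.session_id}-{self.sequence}"


client = WebClient()
print("web client id:", client.session_id)
print("req:", client.next_request_id())
```

With a session ID of 9323, the first call to `next_request_id()` yields `9323-1`, matching the format shown on the page.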

# Architecture

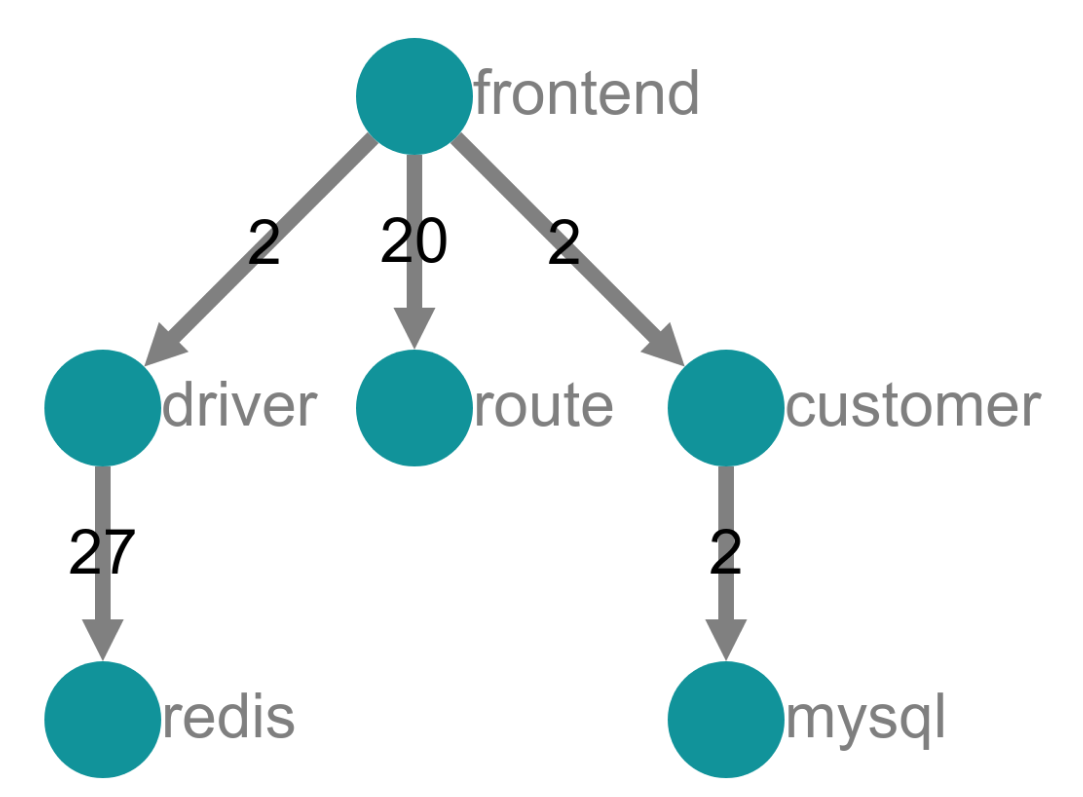

HotROD is a very simple application composed of 6 microservices:

- 1 frontend microservice.

- 3 backend microservices which are called by the frontend.

- 2 storage backends.

The storage nodes are not actually real; they are simulated by the app as internal components. The top four microservices, however, are real.

# 3. Understanding the Data Flow of an application

One of the main advantages of Distributed Tracing is that we are able to track a request through a software system that is distributed across multiple applications, services, and databases as well as intermediaries like proxies.

So, let’s find out the data flow of HotROD.

- Access HotROD (http://localhost:8080/) and click several times on some of the customers.

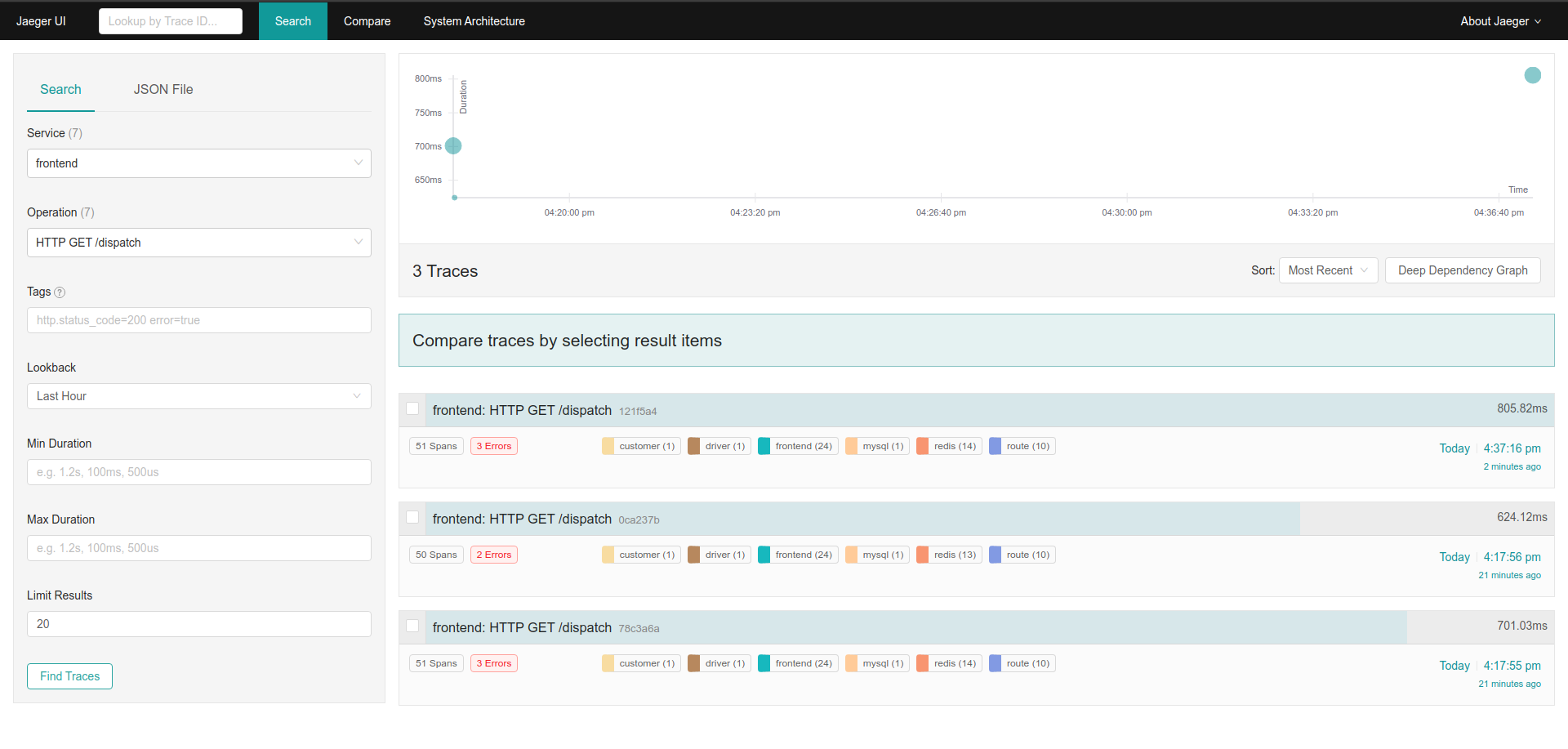

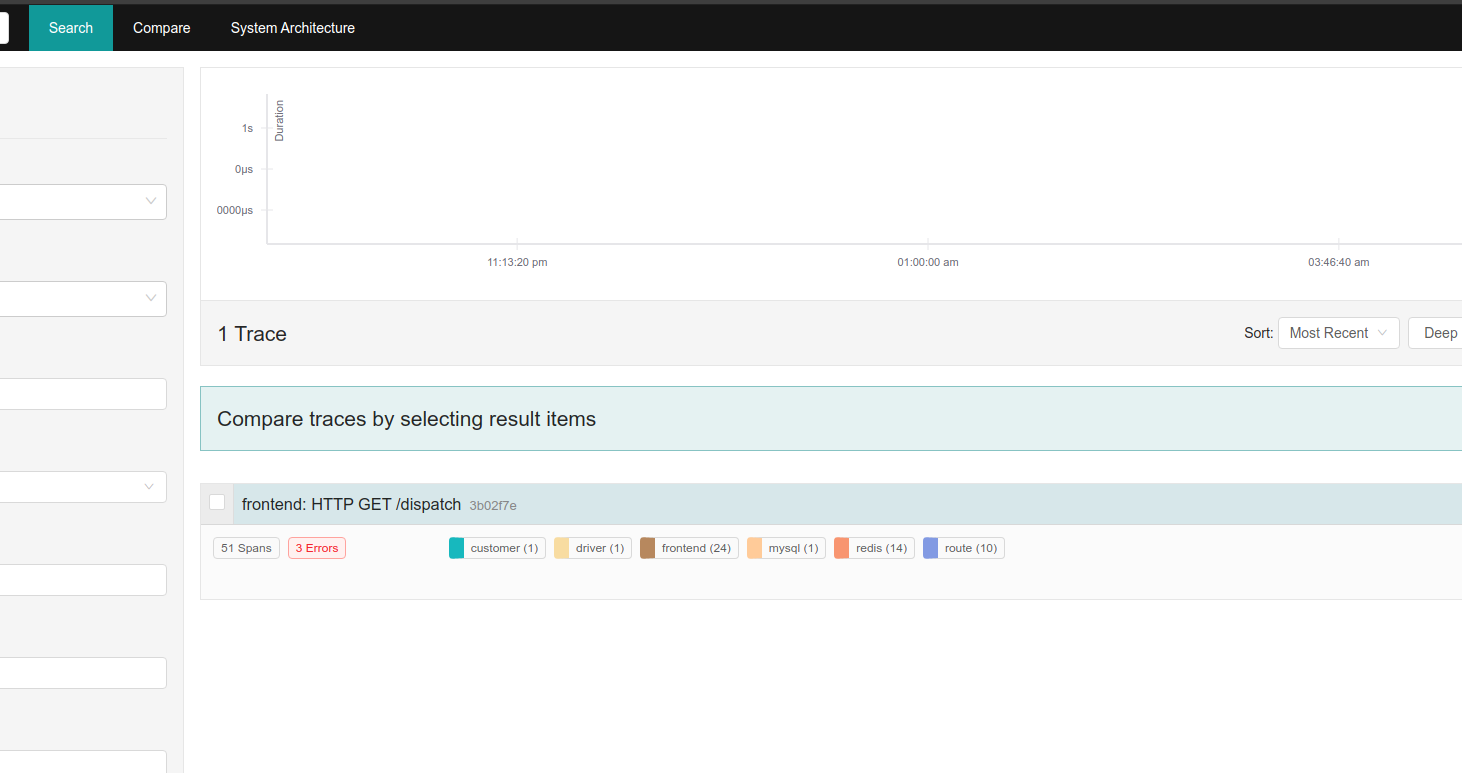

- Go to the Search page in the Jaeger UI.

- Select `frontend` in the Service drop-down list, because it is our root service, and `HTTP GET /dispatch` in the Operation drop-down list to filter the dispatch action.

- Click Find Traces.

- Next, click on one of the traces on the right side.
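The same search can also be run outside the browser. The sketch below assumes the Jaeger query service is reachable at a base URL of your choosing (for example the ingress address used during installation) and that it exposes the `/api/traces` endpoint that the Jaeger UI itself calls. That endpoint is internal to Jaeger and not a stable public API, so treat this as an exploration aid. The function names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import quote, urlencode


def build_search_url(base_url, service, operation, limit=20):
    """Build a trace search URL for the query service's /api/traces endpoint."""
    params = {"service": service, "operation": operation, "limit": limit}
    # quote_via=quote encodes spaces as %20 and "/" as %2F.
    return f"{base_url.rstrip('/')}/api/traces?{urlencode(params, quote_via=quote)}"


def find_traces(base_url, service, operation, limit=20):
    """Fetch matching traces; the JSON response keeps them under the "data" key."""
    url = build_search_url(base_url, service, operation, limit)
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)["data"]


# Example (needs a reachable Jaeger; the address is a placeholder):
# traces = find_traces("http://<K8S_INGRESS_IP>", "frontend", "HTTP GET /dispatch")
```

`build_search_url` is kept separate so the generated URL can be inspected, or pasted into a browser, without making a request.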

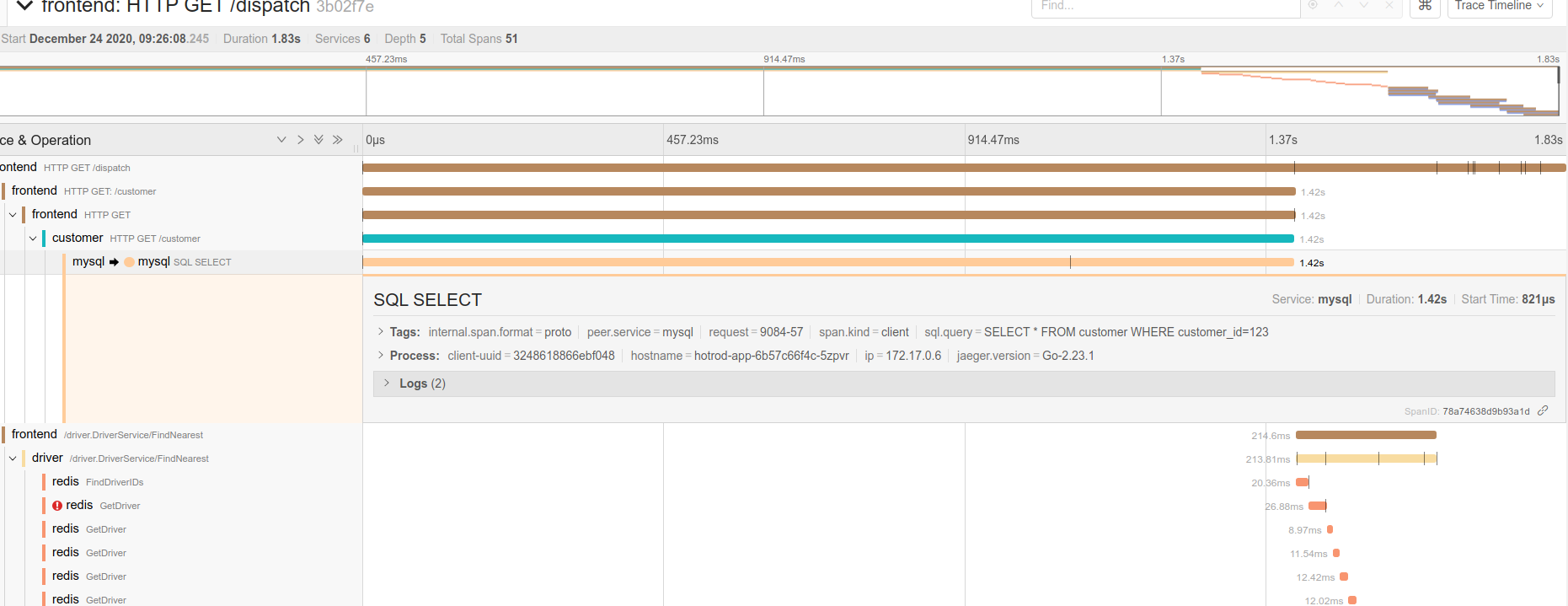

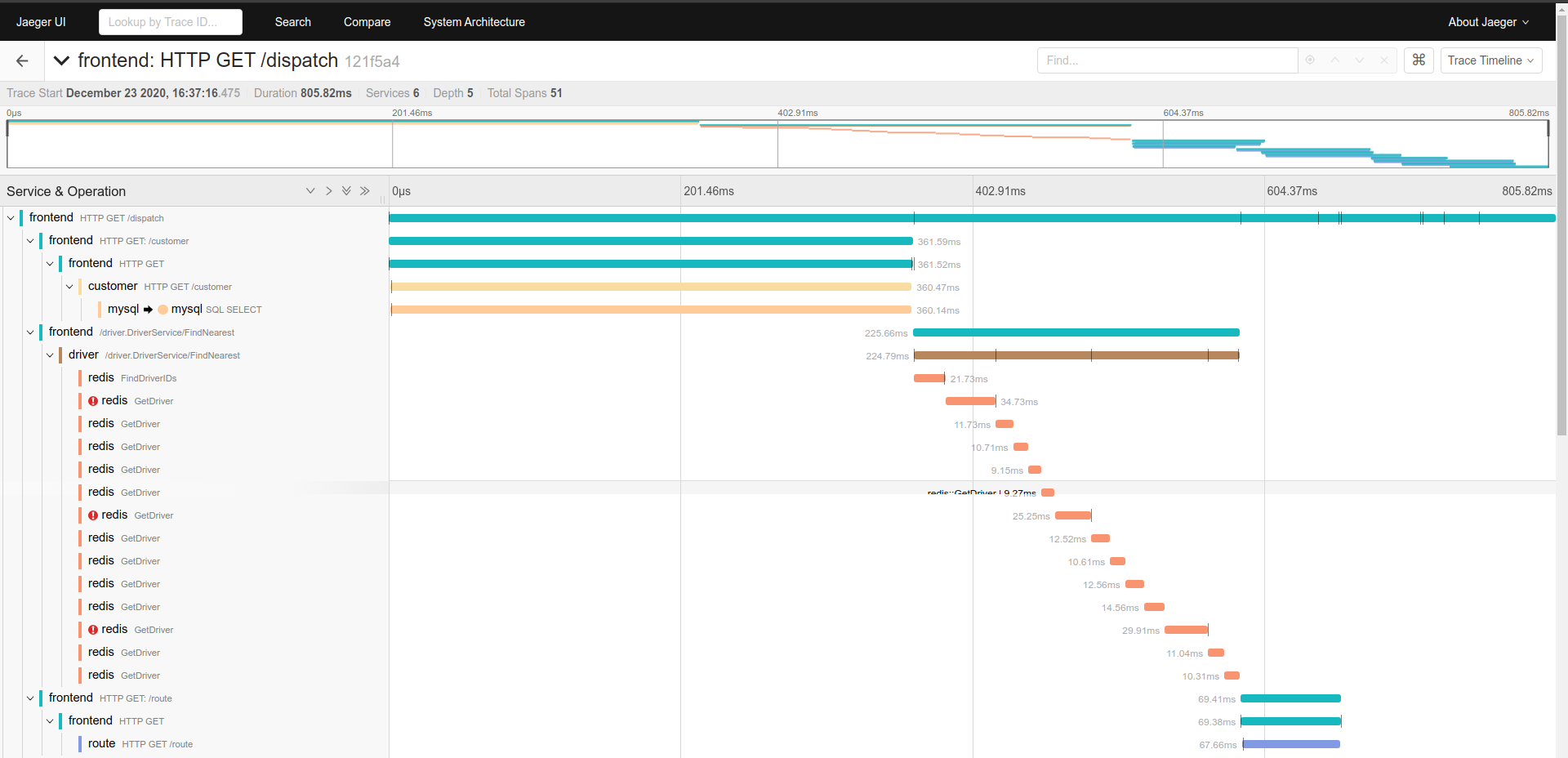

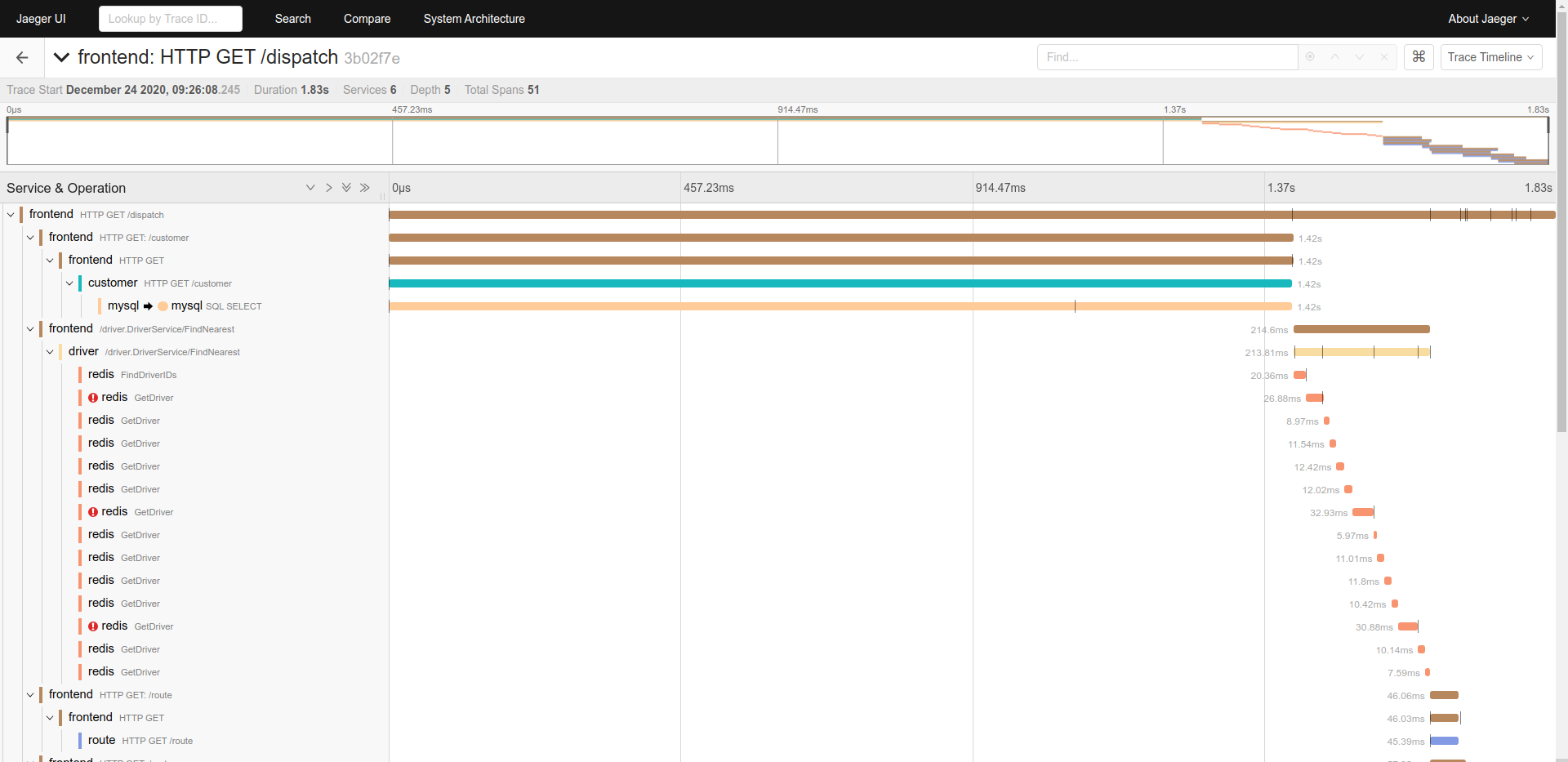

Now, Jaeger shows the trace of one of our requests and displays some metadata about it, such as the names of the different services that participated in the trace and the number of spans each service emitted to Jaeger.

- The top-level endpoint name is displayed in the title bar: `HTTP GET /dispatch`.

- On the right side we see the total duration of the trace. This is shorter than the duration we saw in the HotROD UI because the latter was measured from the browser.

- The timeline view shows a typical view of a trace as a time sequence of nested spans, where a span represents a unit of work within a single service. The top-level span, also called the `root` span, represents the main HTTP request from the JavaScript UI to the `frontend` service, which in turn called the `customer` service, which in turn called a `MySQL` database.

- The width of each span is proportional to the time the operation takes, including the time spent waiting for calls to other services.
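The metadata in the trace header can be recomputed from the raw trace. The sketch below uses a small hand-written trace in the shape of Jaeger's JSON export (spans reference a `processID`, and the `processes` map holds the service name). All IDs, operation names, and timings are made up for illustration.

```python
from collections import Counter

# Hypothetical trace in the shape of Jaeger's JSON export; times are in microseconds.
TRACE = {
    "traceID": "abc123",
    "spans": [
        {"spanID": "s1", "operationName": "HTTP GET /dispatch", "references": [],
         "startTime": 1_000_000, "duration": 700_000, "processID": "p1"},
        {"spanID": "s2", "operationName": "HTTP GET /customer",
         "references": [{"refType": "CHILD_OF", "spanID": "s1"}],
         "startTime": 1_010_000, "duration": 300_000, "processID": "p2"},
        {"spanID": "s3", "operationName": "SQL SELECT",
         "references": [{"refType": "CHILD_OF", "spanID": "s2"}],
         "startTime": 1_020_000, "duration": 280_000, "processID": "p3"},
    ],
    "processes": {
        "p1": {"serviceName": "frontend"},
        "p2": {"serviceName": "customer"},
        "p3": {"serviceName": "mysql"},
    },
}


def summarize(trace):
    """Return (root operation, total duration in microseconds, span count per service)."""
    spans = trace["spans"]
    # The root span is the one without a parent reference.
    root = next(s for s in spans if not s["references"])
    start = min(s["startTime"] for s in spans)
    end = max(s["startTime"] + s["duration"] for s in spans)
    per_service = Counter(trace["processes"][s["processID"]]["serviceName"] for s in spans)
    return root["operationName"], end - start, dict(per_service)


title, duration_us, spans_per_service = summarize(TRACE)
print(title, f"{duration_us / 1000:.0f}ms", spans_per_service)
```

The three returned values correspond to the title bar, the total duration, and the per-service span counts shown in the Jaeger UI.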

Using that information we can generate the data flow of the request:

- An `HTTP GET` request is sent to the `/dispatch` endpoint of the `frontend` service.

- The `frontend` service makes an HTTP GET request to the `/customer` endpoint of the `customer` service.

- The `customer` service executes a statement in the `MySQL DB` and the results are sent back to the `frontend` service.

- The `frontend` service makes a `gRPC` request, `Driver::findNearest`, to the driver service.

- The `driver` service makes several calls to `Redis`. Some of them fail.

- The `frontend` service executes many `HTTP GET` requests to the `/route` endpoint of the `route` service.

- The `frontend` service returns the result.
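The data flow listed above can also be derived mechanically: every span carries a `CHILD_OF` reference to its parent, so walking the spans in start-time order gives the caller-to-callee edges. The following sketch assumes a heavily simplified trace with one span per call. The span IDs, timings, and the `GetDriver` operation name are invented for the example, and the service name doubles as the process ID for brevity.

```python
def make_span(span_id, parent_id, process_id, operation, start_us, duration_us):
    """Build a span dict in the shape of Jaeger's JSON trace export."""
    refs = [{"refType": "CHILD_OF", "spanID": parent_id}] if parent_id else []
    return {"spanID": span_id, "references": refs, "processID": process_id,
            "operationName": operation, "startTime": start_us, "duration": duration_us}


# Hypothetical trace; real HotROD traces contain many more spans.
TRACE = {
    "processes": {name: {"serviceName": name}
                  for name in ("frontend", "customer", "mysql", "driver", "redis", "route")},
    "spans": [
        make_span("s1", None, "frontend", "HTTP GET /dispatch", 0, 700_000),
        make_span("s2", "s1", "customer", "HTTP GET /customer", 10_000, 300_000),
        make_span("s3", "s2", "mysql", "SQL SELECT", 20_000, 280_000),
        make_span("s4", "s1", "driver", "Driver::findNearest", 320_000, 200_000),
        make_span("s5", "s4", "redis", "GetDriver", 330_000, 20_000),
        make_span("s6", "s1", "route", "HTTP GET /route", 530_000, 60_000),
    ],
}


def call_edges(trace):
    """Return (caller, callee, operation) tuples ordered by span start time."""
    by_id = {s["spanID"]: s for s in trace["spans"]}

    def service_of(span):
        return trace["processes"][span["processID"]]["serviceName"]

    edges = []
    for span in sorted(trace["spans"], key=lambda s: s["startTime"]):
        for ref in span["references"]:
            parent = by_id.get(ref["spanID"])
            if ref["refType"] == "CHILD_OF" and parent is not None:
                edges.append((service_of(parent), service_of(span), span["operationName"]))
    return edges


for caller, callee, operation in call_edges(TRACE):
    print(f"{caller} -> {callee}: {operation}")
```

Run against a real trace, the same function would also emit edges within a single service, which can be filtered out by skipping tuples where caller and callee are equal.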

# 4. Searching the source of a bottleneck

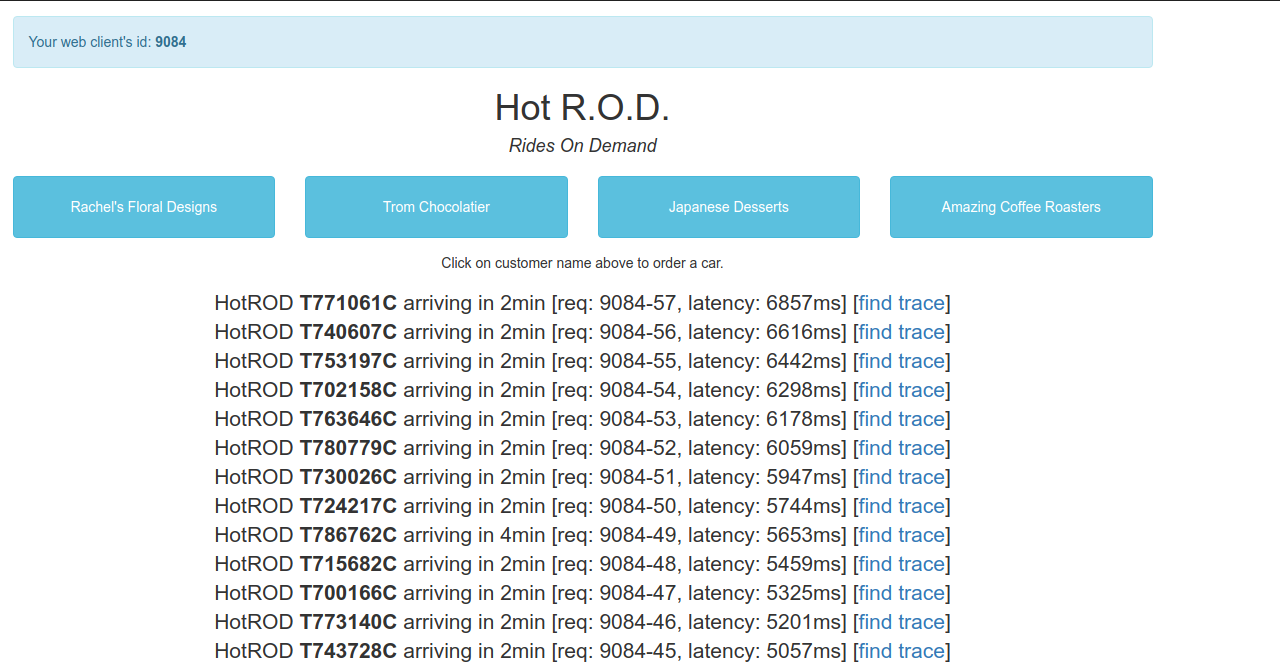

Another cool feature of Distributed Tracing is that its data makes it easy to find the bottlenecks in our system. To demonstrate this, we are going to stress the system with many calls and then use Jaeger to find the root of the problem.

- Access HotROD (http://localhost:8080/) and click multiple times on the customers until the latency goes up very sharply.

- Click the first find trace link, which takes us to the trace of that request in Jaeger.

- Next, click on the `HTTP GET /dispatch` request to see a detailed view of its trace through the system.

Now we are able to see what is going on in the system:

- As we mentioned before, the actual processing time is different from what we saw in the HotROD web page. This is because the server is not able to handle all the requests in time and has to put them in a queue even before any span is generated.

- The trace shows that the request was handled in almost 2 seconds.

- Reviewing all the spans, we can see that the call from the `customer` service to the `mysql` service accounts for more than 70% of the processing time. This is our most promising bottleneck candidate.

- Finally, we can click on the `mysql` service call to see more information about that specific span. Because the developers added a custom tag, `sql.query`, we can very easily identify the SQL statement that is causing the problem.
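The manual inspection above can be approximated in code. The sketch below computes each span's self time (its own duration minus the duration of its direct children), picks the span with the largest value, and reads its `sql.query` tag if present. The trace is hand-written in the shape of Jaeger's JSON export, and the timings and SQL statement are illustrative assumptions. The self-time calculation also assumes that child spans run one after another. With parallel children it would undercount the parent.

```python
# Hypothetical trace in the shape of Jaeger's JSON export (times in microseconds).
TRACE = {
    "processes": {"p1": {"serviceName": "frontend"}, "p2": {"serviceName": "customer"},
                  "p3": {"serviceName": "mysql"}, "p4": {"serviceName": "route"}},
    "spans": [
        {"spanID": "a", "processID": "p1", "operationName": "HTTP GET /dispatch",
         "references": [], "startTime": 0, "duration": 2_000_000, "tags": []},
        {"spanID": "b", "processID": "p2", "operationName": "HTTP GET /customer",
         "references": [{"refType": "CHILD_OF", "spanID": "a"}],
         "startTime": 10_000, "duration": 1_500_000, "tags": []},
        {"spanID": "c", "processID": "p3", "operationName": "SQL SELECT",
         "references": [{"refType": "CHILD_OF", "spanID": "b"}],
         "startTime": 20_000, "duration": 1_450_000,
         "tags": [{"key": "sql.query", "type": "string",
                   "value": "SELECT * FROM customer WHERE customer_id=123"}]},
        {"spanID": "d", "processID": "p4", "operationName": "HTTP GET /route",
         "references": [{"refType": "CHILD_OF", "spanID": "a"}],
         "startTime": 1_600_000, "duration": 300_000, "tags": []},
    ],
}


def find_bottleneck(trace):
    """Return (service, operation, share of trace duration, sql.query tag or None)."""
    spans = trace["spans"]
    total = max(s["startTime"] + s["duration"] for s in spans) - min(s["startTime"] for s in spans)
    # Sum the duration of direct children per parent span.
    child_time = {s["spanID"]: 0 for s in spans}
    for s in spans:
        for ref in s["references"]:
            if ref["refType"] == "CHILD_OF" and ref["spanID"] in child_time:
                child_time[ref["spanID"]] += s["duration"]
    # The bottleneck candidate is the span with the largest self time.
    worst = max(spans, key=lambda s: s["duration"] - child_time[s["spanID"]])
    tags = {t["key"]: t["value"] for t in worst["tags"]}
    service = trace["processes"][worst["processID"]]["serviceName"]
    share = (worst["duration"] - child_time[worst["spanID"]]) / total
    return service, worst["operationName"], share, tags.get("sql.query")


service, operation, share, query = find_bottleneck(TRACE)
print(f"{service} {operation}: {share:.1%} of the trace, sql.query={query!r}")
```

In this made-up trace the `mysql` span owns 1.45 s of a 2 s request, which mirrors the "more than 70%" observation made in the Jaeger UI.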